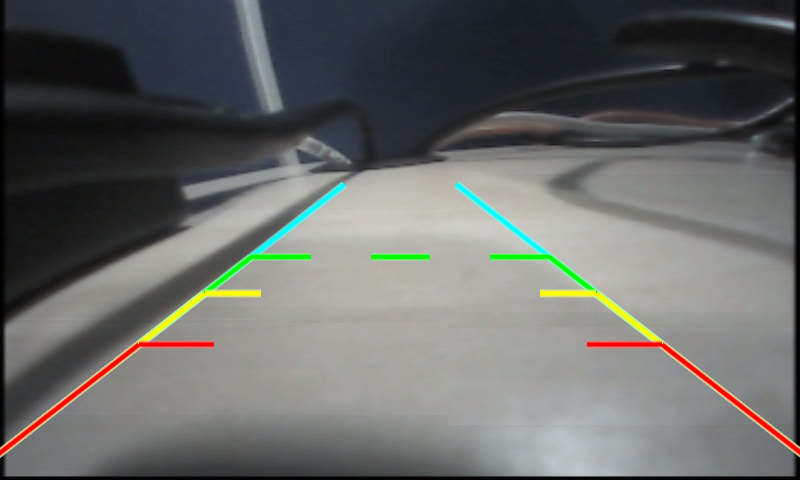

Wikipedia definition: a visual artifact in video display where a display device shows information from multiple frames in a single screen draw.

If a tearing artifact is seen when displaying a captured stream (e.g. camera input), there are a few possible causes:

1. A partial frame was captured and displayed.

In this case, what is being displayed is a combination of an old frame and a new partial frame. Visually, it appears as one or more independent flickers.

How often the tearing occurs depends on the number of buffers used for capture and on how often partial frames are produced.

2. The frames are being displayed out of order. This should be fixed in the application.

This is the simplest cause. It can be verified by checking whether the sequence numbers of the frames being displayed/posted are still in order (assuming each frame is given a unique sequence number when it is captured).

Visually, the entire frame appears to jump back and forth, not just one part of it. Any tearing that does appear is a side effect of how the display refreshes: e.g. if the display refreshes from left to right, the lines being refreshed at that moment can look torn.
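The sequence-number check described above can be sketched as follows. This is a minimal illustration, not part of any capture API: the function name `find_out_of_order` and the idea of logging the seqno of each posted frame into a list are assumptions. A frame is flagged when its seqno is lower than the newest seqno already posted; repeated or skipped seqnos are not flagged, since re-posting or dropping a frame does not by itself reorder frames.

```python
from typing import Iterable, List, Tuple


def find_out_of_order(seqnos: Iterable[int]) -> List[Tuple[int, int, int]]:
    """Return (index, newest_seqno_so_far, seqno) for each frame posted out of order.

    Hypothetical helper: `seqnos` is the list of sequence numbers in the
    order the application posted the frames to the display.
    """
    violations = []
    newest = None  # highest seqno posted so far
    for index, seqno in enumerate(seqnos):
        if newest is not None and seqno < newest:
            # An older frame was posted after a newer one.
            violations.append((index, newest, seqno))
        else:
            newest = seqno
    return violations


# Example log: frame 12 is posted after frame 13.
posted = [10, 11, 13, 12, 14, 14, 15]
for index, newest, seqno in find_out_of_order(posted):
    print(f"post #{index}: seqno {seqno} arrived after seqno {newest}")
```

If the list of violations is empty for a log that covers the period where the artifact was visible, out-of-order display is unlikely to be the cause.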

3. The composition rate or display rate is slower than expected.

In this case, a buffer is returned to the capture hardware for a new frame while it is still being displayed.

Diagnosis: increase the number of capture buffers, or increase the number of buffers held by the application. In either case the artifact should disappear.

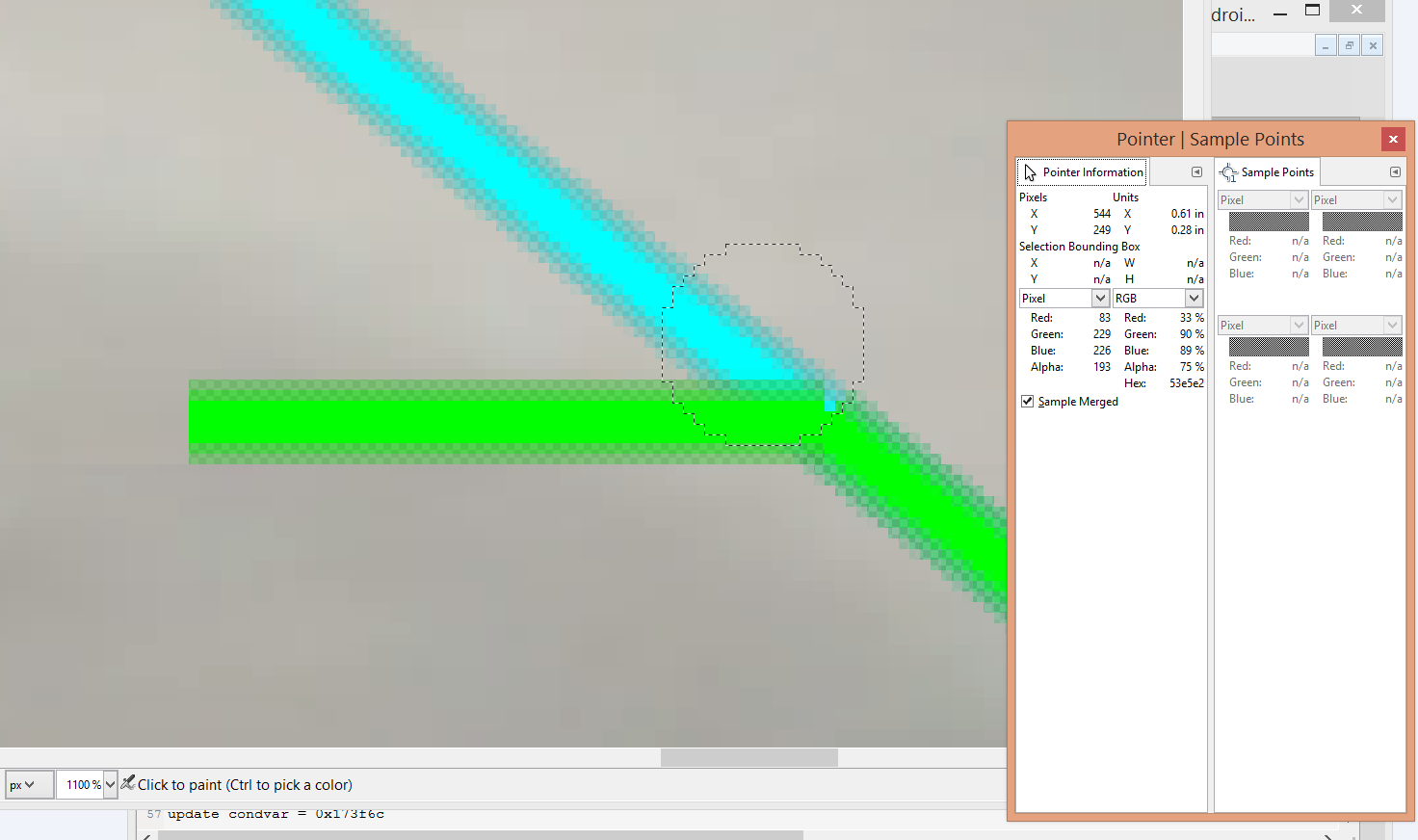
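To see why adding buffers helps in case 3, here is a rough timing sketch under a simplified model that is an assumption, not taken from any specific driver: the capture hardware fills one buffer per capture period and cycles through a ring of `n_buffers` in fixed order, and the display holds each buffer for `display_hold_ms` after it was filled. Under that model, a filled buffer starts being overwritten `(n_buffers - 1)` capture periods later. All names below are hypothetical.

```python
import math


def buffer_reused_while_displayed(
    n_buffers: int, display_hold_ms: float, capture_period_ms: float
) -> bool:
    """True if, in the simplified ring model, a buffer is refilled while still displayed."""
    # A buffer filled at time t is written again at t + (n_buffers - 1) * period.
    return display_hold_ms > (n_buffers - 1) * capture_period_ms


def min_capture_buffers(display_hold_ms: float, capture_period_ms: float) -> int:
    """Smallest ring size for which the model predicts no overwrite during display."""
    if display_hold_ms < 0 or capture_period_ms <= 0:
        raise ValueError("hold time must be >= 0 and capture period must be > 0")
    needed = math.ceil(display_hold_ms / capture_period_ms) + 1
    # Never fewer than two: one buffer being filled, one being displayed.
    return max(2, needed)


# Example: 20 ms capture period, display holds each buffer for 50 ms.
print(buffer_reused_while_displayed(3, 50, 20))  # 3 buffers: overwritten after 40 ms
print(buffer_reused_while_displayed(4, 50, 20))  # 4 buffers: overwritten after 60 ms
print(min_capture_buffers(50, 20))
```

Real pipelines queue and dequeue buffers dynamically, so the numbers are only indicative. The point is that a slower display needs a deeper ring before the hardware stops catching up with the buffer on screen.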

4. The capture hardware continues to write into a buffer when it should not.

In this case, the capture software is out of sync with the capture hardware: the software considers a buffer done and hands it over to the display, while the hardware keeps writing into it.

Visually, this looks like #3: constant tearing, or even a ghosting effect.

Diagnosis: increasing the number of buffers will make it worse.
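The diagnoses above can be combined into a small triage helper. This is only a sketch of the decision order suggested by this page; the function name and the string labels for the buffer experiment are hypothetical, and an "unchanged" result is treated as inconclusive rather than mapped to a cause.

```python
def likely_cause(frames_in_order: bool, effect_of_more_buffers: str) -> str:
    """Map two observations to the most likely cause number from this page.

    effect_of_more_buffers: what happened to the artifact after increasing
    the buffer count: "disappears", "worse", or "unchanged".
    """
    if effect_of_more_buffers not in ("disappears", "worse", "unchanged"):
        raise ValueError(f"unknown observation: {effect_of_more_buffers!r}")
    if not frames_in_order:
        return "2: frames displayed out of order"
    if effect_of_more_buffers == "disappears":
        return "3: composition or display slower than expected"
    if effect_of_more_buffers == "worse":
        return "4: hardware still writing into a handed-over buffer"
    return "inconclusive: check for partial frames (1)"


print(likely_cause(frames_in_order=True, effect_of_more_buffers="worse"))
```

Checking frame order first is deliberate: it is the cheapest test and does not require changing the buffer configuration.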